Nexos AI Credits allow you to use large language models directly inside OpenClaw without managing individual API keys or external integrations. When OpenClaw is connected to Nexos AI, all model usage is billed through credits instead of separate provider accounts.

This makes it much easier to get started, especially if you want to experiment with different AI models or avoid setting up multiple API keys.

Purchasing Nexos AI Credits with OpenClaw

When deploying OpenClaw through the Hostinger Docker Catalog, you can now add Nexos AI Credits directly in the cart.

If you select Nexos AI Credits during checkout, the integration is handled automatically. OpenClaw will be connected to Nexos AI as soon as the container is deployed. There is no need to copy, paste, or manage API keys manually.

If Nexos AI Credits are not added during purchase, OpenClaw will still work, but you will need to configure your own AI provider credentials manually.

Automatic Nexos AI integration

When Nexos AI Credits are included with your OpenClaw order:

- OpenClaw is automatically authenticated with Nexos AI
- No API keys or environment variables are required
- Usage is billed against your Nexos AI Credits balance
- You can switch between supported AI models directly in the OpenClaw interface

This setup is especially useful for production environments where security and simplicity matter.

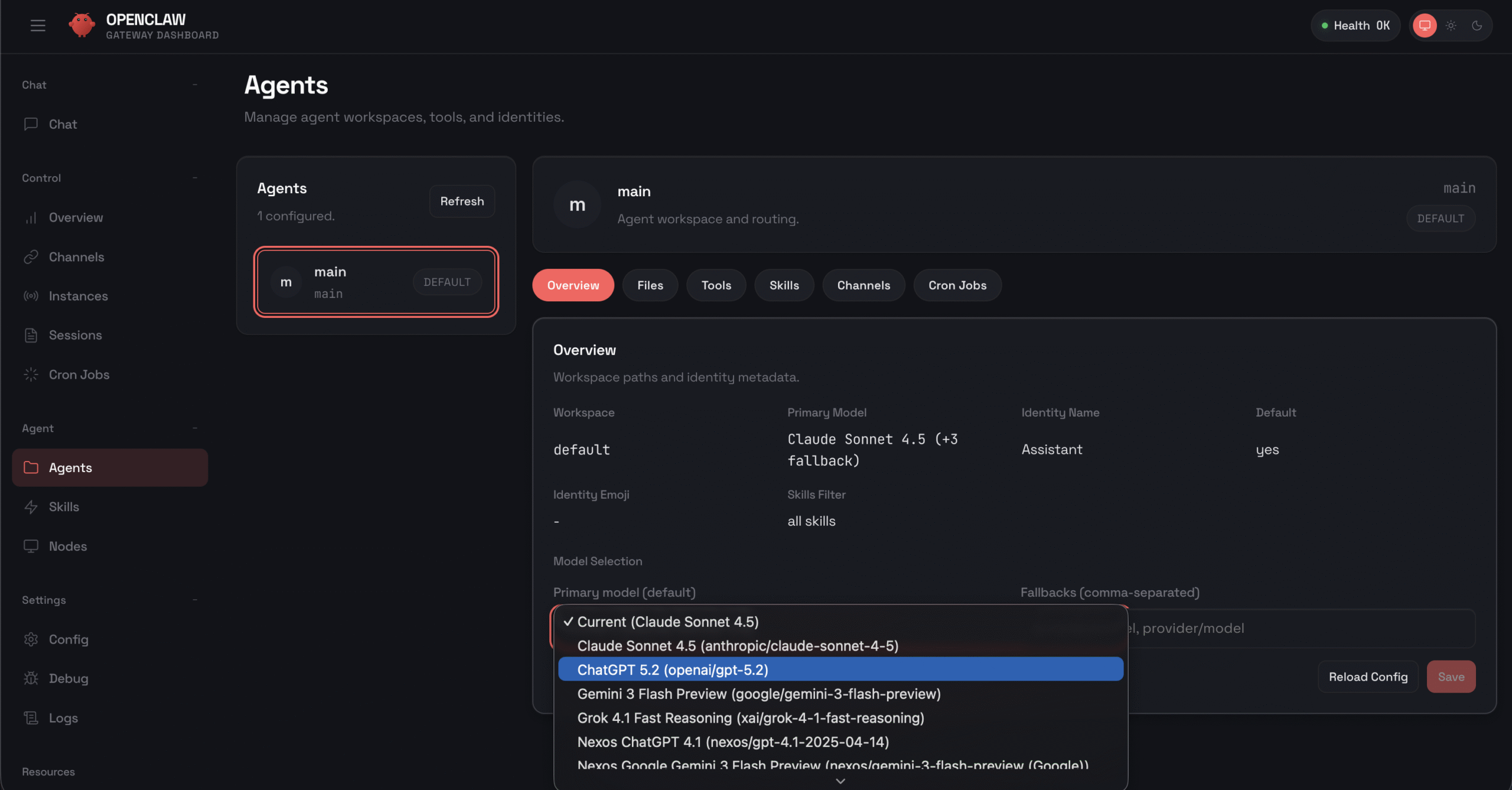

Selecting an AI model in OpenClaw

Once OpenClaw is deployed and connected to Nexos AI, you can choose which AI model your agent should use.

In the OpenClaw dashboard, open your agent configuration and locate the model selection dropdown. From there, you can select any supported Nexos AI model, such as the Claude, GPT, Gemini, or Grok models listed in the pricing table below.

The model selection interface allows you to switch models at any time without redeploying OpenClaw. This makes it easy to balance cost, performance, and response quality depending on your use case.

Different models consume Nexos AI Credits at different rates.

You can find the full, up-to-date pricing for each model in the Nexos documentation.

How credit usage is calculated

Each AI model available through Nexos AI has its own credit cost based on factors such as model size, reasoning capabilities, and response quality. More advanced models consume credits faster, while lighter models are more cost-efficient for simple tasks.

Your credit balance is shared across all OpenClaw agents connected to Nexos AI.

Supported models and pricing

The following table lists the AI models available when using Nexos AI Credits with OpenClaw, along with their credit costs.

| Model | Input cost (per 1M tokens) | Output cost (per 1M tokens) | Cache creation (per 1M tokens) | Cached input (per 1M tokens) |
|---|---|---|---|---|
| Claude Sonnet 4.5 | 3.3 credits | 16.5 credits | 4.125 credits | 0.33 credits |
| Claude Opus 4.6 | 5.5 credits | 27.5 credits | 6.75 credits | 0.55 credits |
| Claude Opus 4 | 15 credits | 75 credits | 18.75 credits | 1.5 credits |
| Gemini 3 Flash | 0.5 credits | 3 credits | Not applicable | 0.05 credits |
| GPT-4.1 | 2.2 credits | 8.8 credits | Not applicable | 0.55 credits |
| Grok 4 | 3 credits | 15 credits | Not applicable | 0.75 credits |

What these costs mean

Input tokens are the text you send to the model, such as prompts, instructions, or conversation history.

Output tokens are the text generated by the model in response.

Cache creation pricing applies when reusable context is first stored in the cache, so that later requests can reuse it at the lower cached input rate.

Cached input pricing is used when previously cached context is reused instead of being processed again.

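More advanced models generally consume more credits, especially for output tokens, while lighter models are more cost-efficient for simple or high-volume tasks. For example, Claude Opus 4 output costs 75 credits per 1M tokens, while Gemini 3 Flash output costs 3 credits per 1M tokens.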
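To get a feel for how these rates add up, here is a minimal Python sketch that estimates the credits for a single request. It is an illustration only, not an official Nexos AI or OpenClaw tool. The `RATES` dictionary and the `estimate_credits` function are hypothetical names, with rates copied from the table above for three of the models. The sketch assumes that credits scale linearly with token count, that each token is billed in exactly one category, and that cache creation cannot be used on models where the table says "Not applicable". Check the Nexos documentation for the actual billing rules.

```python
# Credit rates per 1M tokens, copied from the pricing table above.
# None stands for "Not applicable".
RATES = {
    "Claude Sonnet 4.5": {
        "input": 3.3, "output": 16.5,
        "cache_creation": 4.125, "cached_input": 0.33,
    },
    "Gemini 3 Flash": {
        "input": 0.5, "output": 3.0,
        "cache_creation": None, "cached_input": 0.05,
    },
    "GPT-4.1": {
        "input": 2.2, "output": 8.8,
        "cache_creation": None, "cached_input": 0.55,
    },
}

TOKENS_PER_UNIT = 1_000_000  # rates are quoted per 1M tokens


def estimate_credits(model, input_tokens=0, output_tokens=0,
                     cache_creation_tokens=0, cached_input_tokens=0):
    """Estimate the credits for one request (hypothetical helper).

    Assumes linear, per-token billing with each token counted in
    exactly one category. Real billing may round or group differently.
    """
    rates = RATES[model]
    usage = {
        "input": input_tokens,
        "output": output_tokens,
        "cache_creation": cache_creation_tokens,
        "cached_input": cached_input_tokens,
    }
    total = 0.0
    for category, tokens in usage.items():
        rate = rates[category]
        if rate is None:
            # Assumption: a "Not applicable" category cannot be billed.
            if tokens:
                raise ValueError(f"{category} is not applicable for {model}")
            continue
        total += tokens / TOKENS_PER_UNIT * rate
    return total


if __name__ == "__main__":
    # Same workload on two models: 200,000 input and 50,000 output tokens.
    for name in ("Gemini 3 Flash", "Claude Sonnet 4.5"):
        credits = estimate_credits(name, input_tokens=200_000,
                                   output_tokens=50_000)
        print(f"{name}: {credits:.3f} credits")
```

Under these assumptions, the example workload comes to roughly 0.25 credits on Gemini 3 Flash and roughly 1.485 credits on Claude Sonnet 4.5, which shows how strongly the output rate affects the total.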

Nexos AI Credits simplify the OpenClaw experience by removing manual API configuration and giving you access to multiple AI models out of the box. By adding credits during OpenClaw deployment, you can start using AI immediately and manage everything directly from the OpenClaw dashboard.