Feb 13, 2026

Alma

7 min read

OpenClaw costs range from approximately $6 to $200+ per month, depending on how the system is deployed and how intensively it runs. While OpenClaw itself is open source and free to install, operating it requires ongoing costs for server infrastructure and AI model usage.

The total cost of running OpenClaw depends on the VPS resources allocated, the language models connected, the frequency of automation triggers, and the level of usage monitoring as workflows scale.

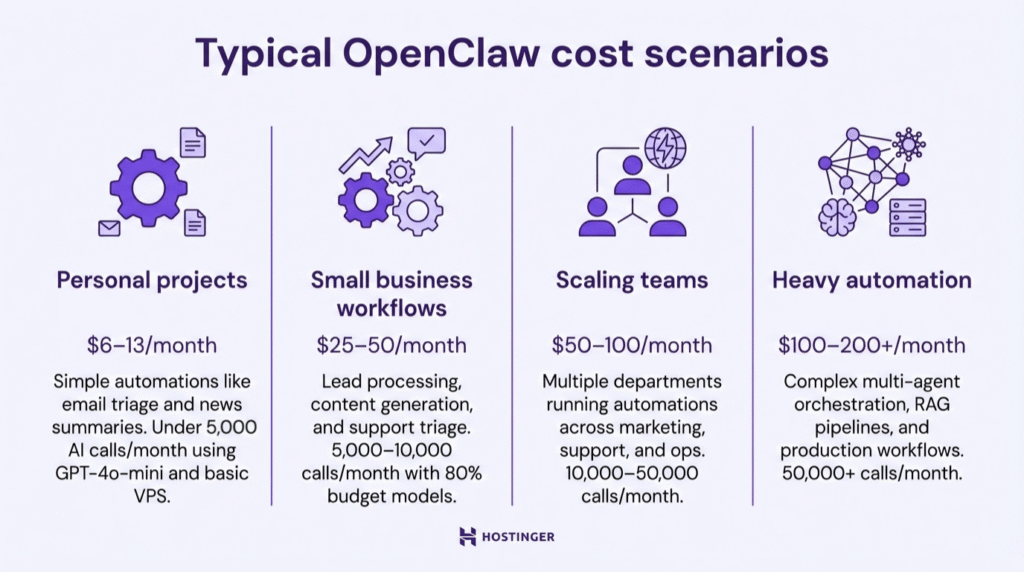

Most personal users fall in the $6–13 range, small teams typically spend $25–50, mid-sized or scaling teams land in the $50–100 range, and heavy-automation setups run $100–200+ when processing thousands of AI interactions daily.

The good news? You control the key levers behind your OpenClaw costs.

OpenClaw is completely free software. It has no licensing fees, subscription costs, or built-in usage charges. It is released under the MIT license, which allows you to run and modify it without paying for the software itself.

The costs begin when you operate it. OpenClaw requires server infrastructure to run on and AI model usage to power its decisions.

Many users misunderstand open source to mean zero cost. The software is free, but the operational expenses are not.

Hosting OpenClaw costs between $5 and $50+ per month for most deployments.

OpenClaw runs as a long-lived service. It must remain online to monitor triggers, process tasks, and execute workflows. That requires a VPS or dedicated server.

Server specifications directly affect pricing:

Light personal setups typically run on a basic VPS plan costing $5–10/month. Production environments with higher uptime guarantees, better isolation, and additional RAM often range between $15–40/month.

Cost is influenced by:

Production deployments justify stronger isolation and guaranteed performance. Dedicated resources, like those in Hostinger’s VPS plans, keep your OpenClaw instance from competing for CPU and RAM.

See the OpenClaw setup instructions to match server specs to your needs.

AI model usage is the highest variable cost of running OpenClaw. Most users spend between $1 and $150 per month on tokens, depending on model selection and workflow intensity.

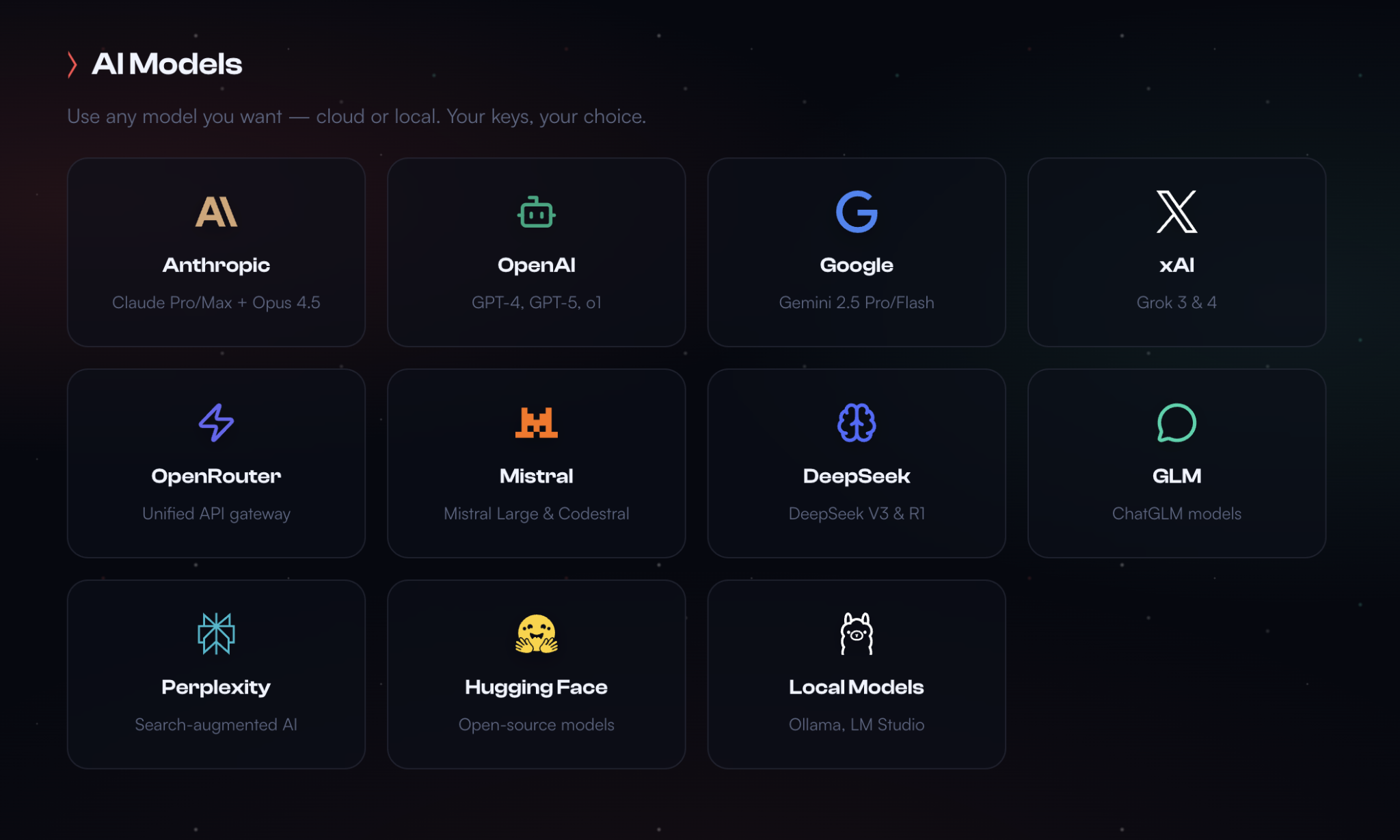

OpenClaw doesn’t include its own AI model. It connects to external language models from providers like OpenAI, Anthropic, Google, and others.

Every conversation, automation step, and decision OpenClaw makes triggers an API call to one of these models. That call consumes tokens.

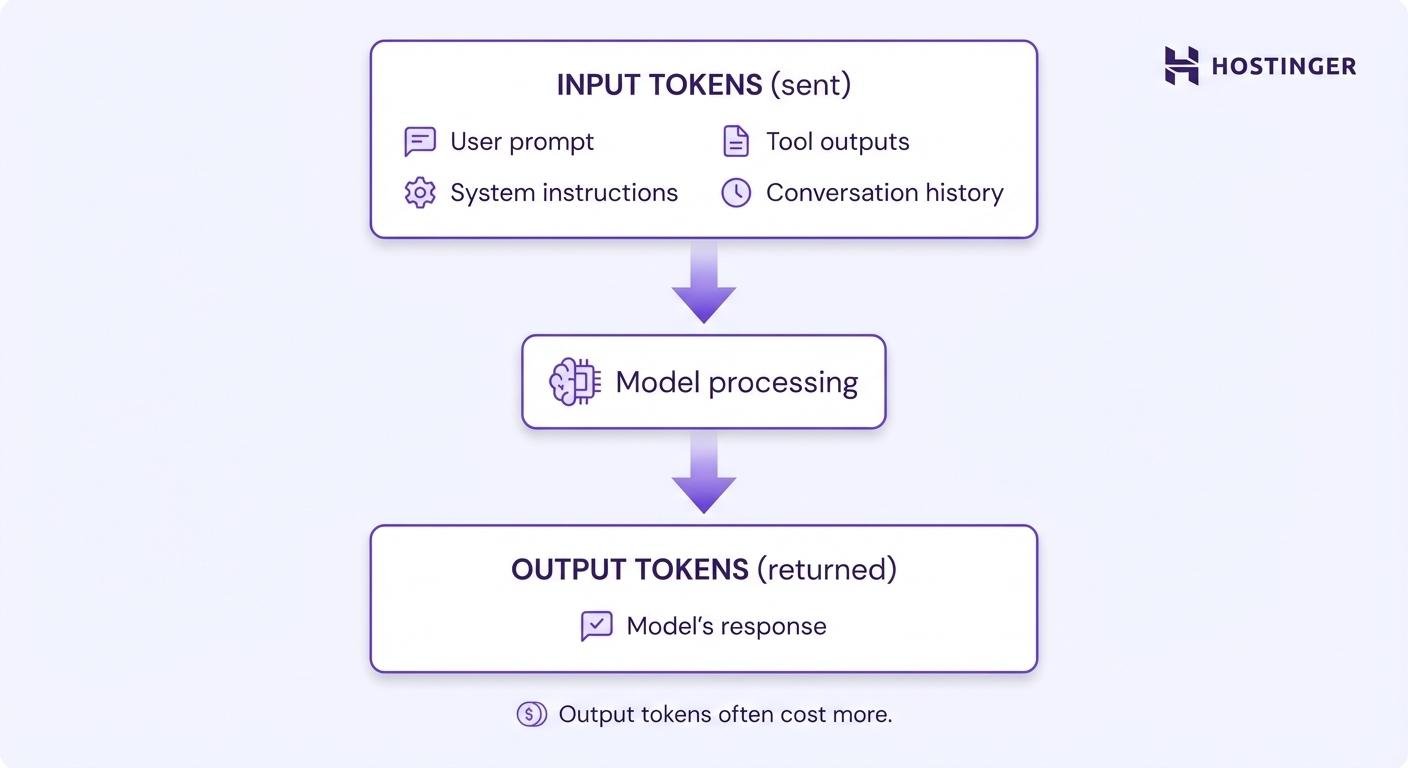

Tokens represent pieces of text. You pay separately for input tokens (the text sent to the model) and output tokens (the text the model generates).

Output tokens usually cost 2–5× more than input tokens.

Here’s what current token pricing looks like for popular models:

Budget models (great for routine tasks):

Mid-tier models (balanced cost and performance):

Premium models (complex reasoning):

A typical OpenClaw interaction uses roughly 1,000 input tokens and 500 output tokens. That single call costs about $0.00045 with GPT-4o-mini or $0.0075 with GPT-4o.

Multiply by your usage frequency: 1,000 interactions per month runs $0.45 with GPT-4o-mini versus $7.50 with GPT-4o.
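The arithmetic above can be reproduced in a few lines of Python. This is a minimal sketch, not an official calculator. The per-million-token rates are assumptions chosen because they reproduce the per-call figures quoted above, and the function names are illustrative. Check your provider’s current price list before relying on them.

```python
# Assumed USD prices per 1 million tokens: (input, output).
# Illustrative values that match the per-call figures in the text;
# verify them against your provider's current pricing.
PRICES_PER_MILLION = {
    "gpt-4o-mini": (0.15, 0.60),
    "gpt-4o": (2.50, 10.00),
}


def call_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one API call for the given token counts."""
    input_price, output_price = PRICES_PER_MILLION[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


def monthly_cost(model: str, calls_per_month: int,
                 input_tokens: int = 1_000, output_tokens: int = 500) -> float:
    """Return the USD cost of a month of identical calls."""
    return calls_per_month * call_cost(model, input_tokens, output_tokens)


if __name__ == "__main__":
    for model in PRICES_PER_MILLION:
        per_call = call_cost(model, 1_000, 500)
        per_month = monthly_cost(model, 1_000)
        print(f"{model}: ${per_call:.5f} per call, ${per_month:.2f} per 1,000 calls")
```

Changing the token counts or call volume shows how quickly output-heavy workflows diverge from the "typical" interaction used here.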

Low-usage experiments (testing OpenClaw with a few dozen messages per week, running simple automations occasionally) cost under $1/month in tokens. Heavy automation scenarios with thousands of multi-step workflows, browser sessions, and complex reasoning can easily hit $50–150/month in API costs alone.

Model choice has a greater impact on cost than server size. Routing simple tasks to smaller models reduces spend significantly without sacrificing quality for routine operations.
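One way to picture this routing is a small function that picks a model per task. The sketch below is hypothetical: the task categories, the `choose_model` helper, and the use of GPT-4o-mini and GPT-4o as the two tiers are assumptions for illustration, not part of OpenClaw’s configuration.

```python
# Hypothetical task categories considered simple enough for a budget model.
ROUTINE_TASKS = {"email_triage", "summarize", "classify", "extract_fields"}

BUDGET_MODEL = "gpt-4o-mini"  # assumed budget tier
PREMIUM_MODEL = "gpt-4o"      # assumed higher tier


def choose_model(task_type: str, needs_reasoning: bool = False) -> str:
    """Send routine tasks to the budget model unless they are flagged as complex."""
    if task_type in ROUTINE_TASKS and not needs_reasoning:
        return BUDGET_MODEL
    return PREMIUM_MODEL
```

Unknown task types fall through to the higher tier here, which favors quality over cost. Flipping that default is a reasonable choice if most of your unclassified tasks are simple.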

Automation scope directly increases token usage and resource consumption because the more tasks you automate, the more AI calls OpenClaw makes, and the faster costs accumulate.

Each workflow trigger, each step in a multi-step automation, and each tool invocation potentially fires an API request.

High-cost patterns include:

Browser automation hits especially hard. While OpenClaw’s architecture documentation explains that the system reduces token usage by roughly 90% by parsing accessibility trees rather than sending screenshots, navigation still requires repeated model decisions.

File operations and parallel runs compound costs, too. If you set up OpenClaw to process batches of documents, generate multiple reports simultaneously, or monitor several messaging platforms at once, you’re multiplying the base cost by the number of parallel workflows.

Here’s the thing beginners often miss: Cost risk appears when workflows scale from testing to production. A task that triggers 10 times per day in testing may trigger 500 times per day once connected to live inputs.
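To see how that jump plays out, here is a rough projection. It assumes the per-call costs quoted earlier ($0.00045 for GPT-4o-mini and $0.0075 for GPT-4o), a 30-day month, and a hypothetical `steps_per_trigger` parameter for multi-step workflows. None of these are measured OpenClaw values.

```python
def projected_monthly_cost(triggers_per_day: int, cost_per_call: float,
                           steps_per_trigger: int = 1, days: int = 30) -> float:
    """Estimate monthly API spend if every workflow step makes one model call."""
    return triggers_per_day * steps_per_trigger * days * cost_per_call


BUDGET_CALL = 0.00045  # per-call cost quoted earlier for GPT-4o-mini
PREMIUM_CALL = 0.0075  # per-call cost quoted earlier for GPT-4o

testing = projected_monthly_cost(10, PREMIUM_CALL)
production = projected_monthly_cost(500, PREMIUM_CALL)
production_budget = projected_monthly_cost(500, BUDGET_CALL)

print(f"testing:    ${testing:.2f}/month")
print(f"production: ${production:.2f}/month")
print(f"production on the budget model: ${production_budget:.2f}/month")
```

Under these assumptions, the same single-step workflow goes from about $2.25 to about $112.50 a month on GPT-4o, or about $6.75 a month on GPT-4o-mini.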

Start small, monitor costs daily for the first week, then scale gradually.

You can explore different OpenClaw use cases to see which automation patterns fit your budget and business needs.

Running separate development and production environments roughly doubles your infrastructure costs, adding another $5–20/month for a test VPS plus the AI tokens consumed during development and debugging.

However, separate environments provide:

Skipping a development environment reduces cost but increases operational risk. To test changes safely before pushing them live, you’ll need a second server.

If a misconfigured automation calls GPT-4 5,000 times instead of 50, you’d rather catch that in a dev environment with budget models than in production with premium ones. The safety is worth the added cost for anything business-critical.
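A simple guard against that kind of runaway loop is a hard cap on model calls per run. The sketch below is a generic pattern, not an OpenClaw feature: `CallBudget`, `BudgetExceeded`, `run_automation`, and the limit of 50 are hypothetical names and values chosen for illustration.

```python
class BudgetExceeded(RuntimeError):
    """Raised when an automation tries to exceed its call allowance."""


class CallBudget:
    """Counts model calls and refuses further calls once a fixed limit is reached."""

    def __init__(self, max_calls: int) -> None:
        self.max_calls = max_calls
        self.used = 0

    def spend(self) -> None:
        """Record one call, or raise if the allowance is already used up."""
        if self.used >= self.max_calls:
            raise BudgetExceeded(f"call limit of {self.max_calls} reached")
        self.used += 1


def run_automation(items: list, budget: CallBudget) -> int:
    """Process items one model call at a time; return how many were handled."""
    handled = 0
    for _ in items:
        try:
            budget.spend()
        except BudgetExceeded:
            break  # stop instead of silently making thousands of calls
        # The real model call would go here.
        handled += 1
    return handled


if __name__ == "__main__":
    handled = run_automation(list(range(5_000)), CallBudget(max_calls=50))
    print(f"handled {handled} of 5000 items before hitting the cap")
```

A cap like this does not replace a test environment, but it limits how much a misconfiguration can spend before someone notices.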

The common trade-off is that solo developers and small projects often skip separate environments to save money, accepting the risk of breaking their production setup during updates.

A middle-ground approach uses production hardware but switches to cheaper AI models for testing.
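That approach can be as small as one environment switch. In the sketch below, the `APP_ENV` variable name, the `model_for_environment` helper, and the model names are assumptions for illustration. OpenClaw’s own configuration keys may differ.

```python
import os
from typing import Optional

# Hypothetical mapping from environment name to the model used there.
MODEL_BY_ENV = {
    "production": "gpt-4o",
    "testing": "gpt-4o-mini",
}


def model_for_environment(env: Optional[str] = None) -> str:
    """Pick the model for an environment, falling back to the cheap one."""
    env = env or os.environ.get("APP_ENV", "testing")
    return MODEL_BY_ENV.get(env, "gpt-4o-mini")
```

Defaulting to the cheaper model means an unset or misspelled environment name errs toward lower cost, not higher.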

Additionally, according to OpenClaw security best practices, isolating test environments helps prevent accidental exposure of production credentials, real customer data, or live integrations during development.

With a cost-optimized setup, OpenClaw costs $6–13/month for personal projects, $25–50/month for small business workflows, $50–100/month for scaling teams, and $100–200+/month for heavy operations, depending on usage patterns and model selection.

Here’s what scenarios look like with current pricing:

Personal projects (under 5,000 AI calls/month)

You’re running simple automations such as email triage, daily news summaries, and occasional web research. With GPT-4o-mini as your primary model and a basic VPS, you’ll spend roughly $6–13/month total.

That breaks down to roughly $5–7/month for Hostinger KVM 1 hosting (listed at RM19.99/month), plus $1–6 in AI tokens depending on usage intensity. This is cheaper than a single Zapier Professional subscription.

Small business workflows (5,000–10,000 calls/month)

You’re running lead processing, content generation, CRM syncing, and customer support triage across a small team. Using a mix of 80% budget models and 20% mid-tier models for complex tasks, expect $25–50/month.

Most of that cost comes from AI tokens ($15–35), with server costs around $7–15 depending on performance needs.

Scaling teams (10,000–50,000 calls/month)

You’re running multiple departments on OpenClaw (marketing, support, internal ops), with several automations per team and regular browser steps. With a mix of 60–80% budget models and 20–40% mid-tier models, monthly costs land in the $50–100 range.

Most of that comes from AI tokens ($35–80), with infrastructure in the $10–20 range for 2–4 vCPU servers and 8–16 GB RAM.

Heavy automation (50,000+ calls/month)

You’re running complex multi-agent orchestration, RAG pipelines, extensive browser automation, and production workflows. Heavy usage requires 4–8 vCPU servers with 16+ GB RAM. Your monthly bill runs $100–200+, with $80–150 in AI costs and $15–25 in infrastructure.

Strategic model routing (using budget models for routine tasks, premium models only when needed) keeps this manageable.

The variability comes from model choice more than anything else. Switching from GPT-4o to GPT-4o-mini for 80% of your calls can cut costs by 60–80%, with minimal impact on quality for simple tasks.
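That range can be sanity-checked against the per-call figures quoted earlier. This sketch assumes every call has the same token profile ($0.0075 on GPT-4o, $0.00045 on GPT-4o-mini). The function name `savings_from_routing` is illustrative.

```python
def savings_from_routing(share_to_budget: float,
                         premium_cost: float = 0.0075,
                         budget_cost: float = 0.00045) -> float:
    """Return the fractional cost reduction from moving a share of calls
    from the premium model to the budget model."""
    if not 0.0 <= share_to_budget <= 1.0:
        raise ValueError("share_to_budget must be between 0 and 1")
    blended = (1 - share_to_budget) * premium_cost + share_to_budget * budget_cost
    return 1 - blended / premium_cost


if __name__ == "__main__":
    print(f"{savings_from_routing(0.8):.1%}")
```

Under these assumptions, moving 80% of calls to the budget model cuts token spend by about 75%, which sits inside the 60–80% range. Real savings depend on how token counts differ between the tasks you move and the ones you keep.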

Hidden or often overlooked costs for OpenClaw include backups, storage growth, monitoring tools, and idle automations that silently drain your budget. These expenses sneak up on people because they’re easy to miss during initial setup.

OpenClaw costs remain predictable when usage is monitored and automation expands gradually.

Practical strategies include:

Exciting news for new OpenClaw users! For a limited time, we’re doubling your AI credits on your first purchase:

Buy 5 AI Credits – Get 5 Free (10 total)

Buy 20 AI Credits – Get 20 Free (40 total)

No extra steps are required: credits are automatically added to eligible purchases when selecting nexos.ai AI credits.

Hostinger’s one-click OpenClaw deployment and integrated AI credits let you go from zero to running autonomous AI agents in under 5 minutes. You can switch between Claude, ChatGPT, and Gemini without redeploying or reconfiguring API keys, and manage both infrastructure and AI usage from a single dashboard with transparent, controllable costs.

More advanced users who prefer managing their own API keys can still do so: OpenClaw’s configuration file supports 20+ providers.

For comprehensive strategies on running OpenClaw efficiently, check out OpenClaw best practices.
