Jan 21, 2026

Aris S. & Matleena S.


AI search optimization is the practice of adapting your content and technical SEO so generative AI systems – like ChatGPT and Google AI Overviews – can easily process, parse, and cite it.

Unlike traditional search engines, which rank pages mostly based on keywords and backlinks, generative AI tools consider the meaning, context, and authority of content to generate direct answers.

To optimize for AI search, you’ll need to:

Explore AI search optimization in detail, including how it works, its authority signals, and how to improve your content for it.

AI search optimization works by adapting your content and technical search engine optimization (SEO) so that AI systems can find, process, and understand your information.

Traditional search engines primarily rank pages based on keyword matching and linking. Meanwhile, generative AI agents and tools with search capability use multi-step processes to synthesize a comprehensive answer based on the meaning behind a user’s query.

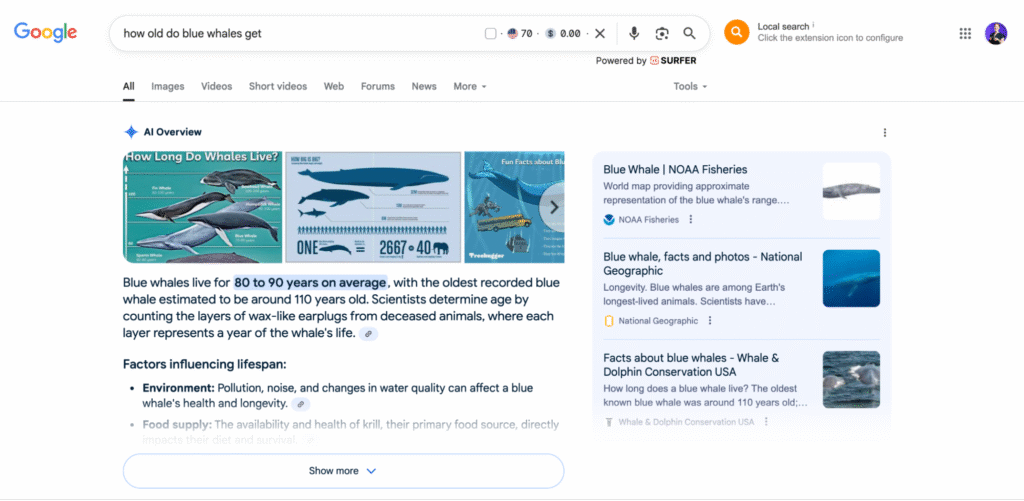

For instance, for the query “How old do blue whales get,” the AI Overview compiles information about the whale’s lifespan from various sources and summarizes it to answer the query directly.

When a user submits a conversational query, an AI search engine follows a specific process to generate an answer:

This process is designed to support an ongoing conversation, where the AI remembers previous exchanges and users can ask natural follow-up questions.

Therefore, your content must be structured to answer those follow-up questions and connect related topics smoothly.

AI search optimization also emphasizes the content’s credibility and trustworthiness, since AI systems treat more authoritative content as more relevant to a query. This is where Google’s E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness) framework becomes a core standard for evaluating content quality.

Similar to developing an SEO-friendly website, there are several signals to pay attention to when improving authority for AI search engines:

To optimize for AI search, you must go beyond traditional SEO practices and focus on creating content and a site infrastructure that AI can easily understand and trust.

AI models prefer content that is easy to parse and focused on answering user intent directly. Here’s how to create such content:

Structured data is a method of labeling content to help search engines and AI systems understand it better. This enables them to present information in a way that more accurately answers user queries, such as formatting it as a rich result.
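As an illustration, structured data is commonly added as JSON-LD using the schema.org vocabulary. The Python sketch below builds a minimal `Article` object and wraps it in the `<script type="application/ld+json">` tag you would place in a page’s HTML. The function name and the headline, author, and date values are hypothetical placeholders; check Google’s structured data documentation for the properties each rich result type expects.

```python
import json


def article_jsonld(headline: str, author_names: list[str], date_published: str) -> str:
    """Build a schema.org Article object and wrap it in a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        # One Person object per author
        "author": [{"@type": "Person", "name": name} for name in author_names],
        "datePublished": date_published,  # ISO 8601 date, e.g. "2026-01-21"
    }
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )


if __name__ == "__main__":
    # Hypothetical values for illustration only
    print(article_jsonld("Example headline", ["Jane Doe"], "2026-01-21"))
```

Paste the printed tag into the page’s `<head>` or body, then test it with a structured data testing tool before publishing.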

Here’s how you can structure your content properly:

AI search engines use natural language understanding (NLU) to analyze both the context and the intent behind a query.

By directly targeting user intents, your content will answer their search queries more efficiently and precisely, improving the likelihood of AI search engines citing your website.

Here’s how to optimize for different user intents.

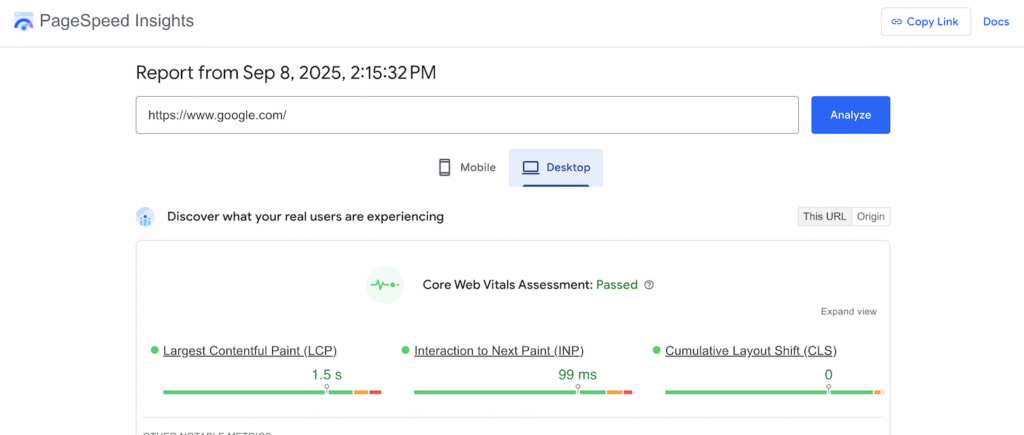
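To plan content around intent, it can help to group your target queries first. The sketch below is a deliberately simple, hypothetical keyword heuristic that sorts queries into four commonly used intent categories: informational, navigational, commercial, and transactional. The modifier lists and the `brand_terms` parameter are assumptions to adapt to your niche; AI search engines themselves rely on NLU, not keyword lists like these.

```python
# Hypothetical modifier words per intent; adapt them to your niche.
INTENT_MODIFIERS = {
    "transactional": ("buy", "price", "cheap", "discount", "order", "coupon"),
    "commercial": ("best", "vs", "review", "compare", "top"),
    "informational": ("how", "what", "why", "when", "guide", "tutorial"),
}


def classify_intent(query: str, brand_terms: tuple[str, ...] = ()) -> str:
    """Tag a query with a likely intent using simple keyword rules."""
    text = query.lower()
    # Queries that mention your brand usually aim to reach a specific site.
    if any(term.lower() in text for term in brand_terms):
        return "navigational"
    words = text.split()
    # Dicts keep insertion order, so transactional modifiers are checked first.
    for intent, modifiers in INTENT_MODIFIERS.items():
        if any(word in modifiers for word in words):
            return intent
    # Fall back to informational when no modifier matches.
    return "informational"


if __name__ == "__main__":
    for q in ["How old do blue whales get", "best web hosting", "buy web hosting"]:
        print(q, "->", classify_intent(q))
```

Grouping queries this way makes it easier to see which intents your existing pages already answer and which ones still need content.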

A fast, mobile-friendly website is a trust signal for users and AI search engines. It also helps AI crawlers parse and process your content more efficiently, improving the chance of being displayed to users.

To ensure your website performs well, use tools like Google PageSpeed Insights to check its page loading speed and identify areas for improvement. These insights help you decide which optimizations to prioritize when fixing performance issues.
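If you want to check many pages, PageSpeed Insights also offers a public API (v5). The sketch below builds a request URL for the `runPagespeed` endpoint and reads the Lighthouse performance score from the JSON response, where it appears as a value between 0 and 1. The helper names are hypothetical, and for regular automated use an API key (passed as the `key` parameter) is advisable.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url: str, strategy: str = "mobile") -> str:
    """Build a PageSpeed Insights API v5 request URL for one page."""
    return PSI_ENDPOINT + "?" + urlencode({"url": page_url, "strategy": strategy})


def performance_score(psi_response: dict) -> int:
    """Extract the Lighthouse performance score (0-1) and scale it to 0-100."""
    score = psi_response["lighthouseResult"]["categories"]["performance"]["score"]
    return round(score * 100)


def fetch_score(page_url: str, strategy: str = "mobile") -> int:
    """Call the API over the network and return the page's performance score."""
    # Analysis can take a while, so allow a generous timeout.
    with urlopen(psi_request_url(page_url, strategy), timeout=120) as resp:
        return performance_score(json.load(resp))
```

For example, `fetch_score("https://example.com")` runs the mobile analysis and returns a score out of 100; pass `strategy="desktop"` to test the desktop version instead.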

Your website must also look and work properly on mobile devices. Besides reaching a broader range of users, mobile-friendliness contributes to a better user experience, which can signal to AI systems that your site is reputable.

Some of the strategies for making your website mobile-friendly include strategically placing interactive elements and minimizing visual clutter that can affect usability on the smaller screens of mobile devices.

Building authority is crucial for getting your content cited by AI. Practices to improve the trustworthiness of your site include:

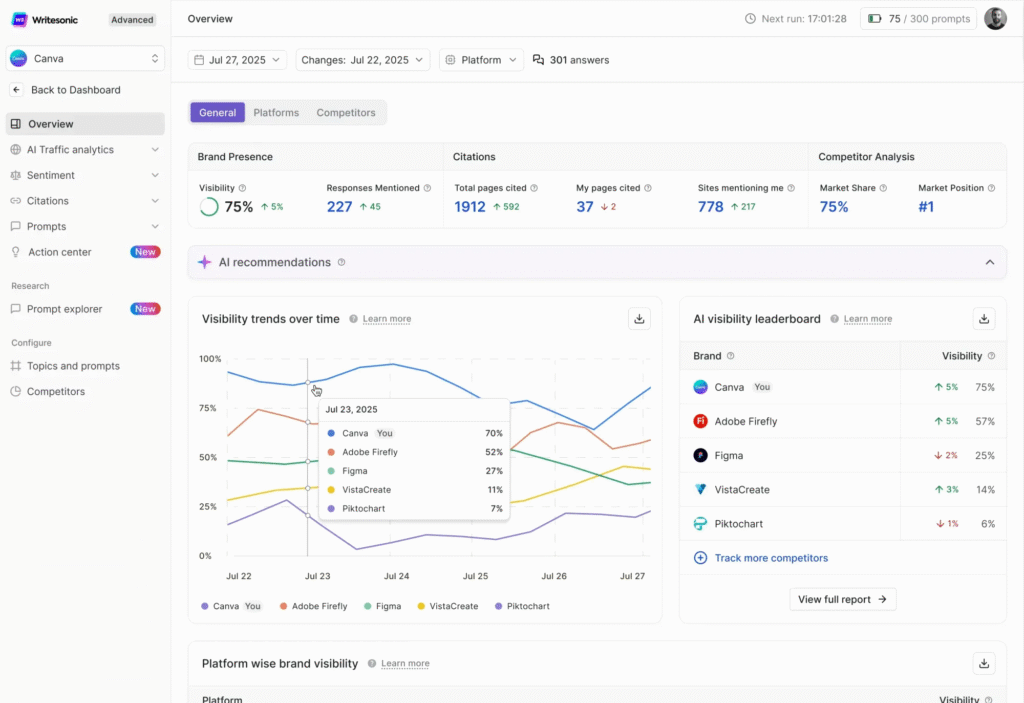

Traditional analytics tools often don’t capture AI crawler activity. You need specialized tools to measure your AI overview performance.
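One way to see this activity without a dedicated tool is to read your server’s access logs, since crawlers identify themselves in the User-Agent header. The sketch below counts requests from a few known AI crawler tokens in logs that use the common combined format, where the user agent is the last quoted field. The token list is an assumption and not exhaustive; check each AI provider’s documentation for its current crawler names, and keep in mind that user agents can be spoofed.

```python
import re
from collections import Counter

# Non-exhaustive list of AI crawler user-agent tokens (an assumption; verify with each vendor).
AI_CRAWLER_TOKENS = ("GPTBot", "OAI-SearchBot", "ChatGPT-User", "PerplexityBot", "ClaudeBot")

# In the combined log format, the user agent is the last double-quoted field on the line.
USER_AGENT_RE = re.compile(r'"([^"]*)"\s*$')


def count_ai_crawler_hits(log_lines) -> Counter:
    """Count log lines whose user agent contains one of the AI crawler tokens."""
    counts = Counter()
    for line in log_lines:
        match = USER_AGENT_RE.search(line)
        if not match:
            continue  # skip lines that don't end with a quoted user agent
        user_agent = match.group(1)
        for token in AI_CRAWLER_TOKENS:
            if token in user_agent:
                counts[token] += 1
                break  # count each request once
    return counts
```

Pass any iterable of lines, such as an open log file; the log’s location depends on your server setup.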

Platforms like Writesonic’s GEO tool can provide comprehensive insights into how AI crawlers interact with your content in real time. Use them to:

Clean HTML helps AI crawlers parse and process your content more effectively because it tells them what each section is about.

To do this, use semantic HTML5 tags like <article>, <section>, <header>, and <footer> to logically structure your page. This helps AI understand the different parts of your content and their relationships.

Then, organize your content with clear H1, H2, and H3 headings to help AI understand the hierarchy of information and how each section relates to subqueries. This increases the likelihood of your content being featured in AI-generated summaries.
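To check a page against both points, you can audit its markup. The sketch below uses Python’s built-in `html.parser` to report which of the four semantic tags mentioned above are missing and whether heading levels skip a step, such as jumping from H1 to H3. The class and function names are hypothetical, and treating “exactly one H1” as a rule is an assumption: it is a common convention rather than a hard requirement.

```python
from html.parser import HTMLParser

# The semantic HTML5 tags named in the text above
SEMANTIC_TAGS = {"article", "section", "header", "footer"}


class OutlineAudit(HTMLParser):
    """Collect semantic tags and heading levels (h1-h6) in document order."""

    def __init__(self):
        super().__init__()
        self.semantic_tags = set()
        self.heading_levels = []

    def handle_starttag(self, tag, attrs):
        # html.parser passes tag names in lowercase
        if tag in SEMANTIC_TAGS:
            self.semantic_tags.add(tag)
        elif len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.heading_levels.append(int(tag[1]))


def audit_html(html: str) -> list[str]:
    """Return a list of human-readable structure problems (empty if none)."""
    parser = OutlineAudit()
    parser.feed(html)
    problems = []
    missing = SEMANTIC_TAGS - parser.semantic_tags
    if missing:
        problems.append("missing semantic tags: " + ", ".join(sorted(missing)))
    h1_count = parser.heading_levels.count(1)
    if h1_count != 1:
        problems.append(f"expected exactly one <h1>, found {h1_count}")
    # Compare each heading with the next one to catch skipped levels.
    levels = parser.heading_levels
    for prev, cur in zip(levels, levels[1:]):
        if cur > prev + 1:
            problems.append(f"heading level skips from h{prev} to h{cur}")
    return problems
```

Feed it the HTML of a rendered page; an empty list means the page passed these basic checks.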

Your robots.txt file is the primary tool for controlling which crawlers can access your site. AI crawlers are not blocked by default, so if you want to control them, you need to explicitly allow or block each one.

This gives you more granular control and ensures that the AI crawlers you want can access your content. Limiting which crawlers parse your content also helps reduce server load, since AI crawlers can generate large amounts of traffic.

When writing robots.txt, avoid a blanket disallow directive (User-agent: * followed by Disallow: /) and instead provide specific instructions for each AI crawler. A blanket disallow would also block search engine crawlers like Googlebot, preventing Google Search from indexing your site and harming traditional SEO.

Here’s a good example of a robots.txt snippet:

User-agent: GPTBot
Allow: /

User-agent: PerplexityBot
Allow: /
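Before deploying rules like these, you can test them locally with Python’s standard `urllib.robotparser` module. This sketch parses the GPTBot and PerplexityBot rules above plus a hypothetical `ExampleBot` group that shows an explicit block, then checks which crawlers may fetch a sample URL. Googlebot stays allowed because no group targets it. The `check_access` helper is hypothetical, and the standard-library parser follows classic robots.txt matching, which may differ in edge cases (such as wildcards) from how individual crawlers interpret the file.

```python
from urllib.robotparser import RobotFileParser

# Rules from the snippet above, plus a hypothetical "ExampleBot" group that is blocked.
ROBOTS_TXT = """\
User-agent: GPTBot
Allow: /

User-agent: PerplexityBot
Allow: /

User-agent: ExampleBot
Disallow: /
"""


def check_access(robots_txt: str, agents: list[str], url: str) -> dict[str, bool]:
    """Return whether each user agent may fetch `url` under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # Mark the rules as loaded; can_fetch() refuses everything until this is set.
    parser.modified()
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    results = check_access(
        ROBOTS_TXT,
        ["GPTBot", "PerplexityBot", "ExampleBot", "Googlebot"],
        "https://example.com/blog/getting-started",
    )
    for agent, allowed in results.items():
        print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

Running it reports GPTBot, PerplexityBot, and Googlebot as allowed and ExampleBot as blocked.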

llms.txt is a proposed standard: a simple Markdown file that provides a curated, AI-optimized map of your website. It gives large language models (LLMs) a concise table of contents, helping them find and retrieve relevant information within their token limits.

To create llms.txt, make a Markdown file with that name and place it in your website’s root directory. Then, include a project title as an H1 heading, a short summary in a blockquote, and H2 sections with curated links to your most important content.

Here’s an example of llms.txt structure:

# My Awesome Website

> This is a website about creating awesome websites.

## Blog

* [Getting Started with Web Hosting](https://example.com/blog/getting-started)
* [How to Use the Hostinger Website Builder](https://example.com/blog/website-builder)

## Products

* [Web Hosting Plans](https://example.com/hosting-plans)
* [Domain Checker](https://example.com/domain-checker)
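If you maintain many links, you could generate the file instead of writing it by hand. The sketch below renders the same structure as the example above: an H1 title, a blockquote summary, and H2 sections of Markdown links. The `build_llms_txt` function and the dictionary layout for sections are hypothetical choices for illustration.

```python
def build_llms_txt(title: str, summary: str, sections: dict[str, list[tuple[str, str]]]) -> str:
    """Render an llms.txt body: H1 title, blockquote summary, then H2 link sections."""
    lines = [f"# {title}", "", f"> {summary}"]
    for section, links in sections.items():
        lines += ["", f"## {section}", ""]
        # Each link is a (link text, URL) pair rendered as a Markdown list item.
        lines += [f"* [{text}]({url})" for text, url in links]
    return "\n".join(lines) + "\n"


# The example content from this section
EXAMPLE_SECTIONS = {
    "Blog": [
        ("Getting Started with Web Hosting", "https://example.com/blog/getting-started"),
        ("How to Use the Hostinger Website Builder", "https://example.com/blog/website-builder"),
    ],
    "Products": [
        ("Web Hosting Plans", "https://example.com/hosting-plans"),
        ("Domain Checker", "https://example.com/domain-checker"),
    ],
}

if __name__ == "__main__":
    print(build_llms_txt(
        "My Awesome Website",
        "This is a website about creating awesome websites.",
        EXAMPLE_SECTIONS,
    ), end="")
```

Save the output as llms.txt in your site’s root directory.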

Then, validate your llms.txt using our online llms.txt validator, and check that it is published correctly by visiting the following URL, replacing domain.com with your actual domain name:

domain.com/llms.txt
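You can also automate that check. The sketch below builds the URL, downloads the file, and applies a minimal structural test: the first non-empty line must be an H1 title, which the llms.txt proposal treats as its only required element. These helpers are hypothetical, are not a replacement for a full validator, and assume your site is served over HTTPS.

```python
from urllib.request import urlopen


def llms_txt_url(domain: str) -> str:
    """llms.txt lives at the site root, e.g. https://domain.com/llms.txt."""
    return f"https://{domain.strip('/')}/llms.txt"


def looks_like_llms_txt(text: str) -> bool:
    """Minimal check: the first non-empty line is a Markdown H1 title."""
    for line in text.splitlines():
        if line.strip():
            return line.startswith("# ")
    return False  # empty file


def check_published(domain: str) -> bool:
    """Fetch the live file and apply the structural check."""
    # urlopen raises HTTPError for 4xx/5xx responses, such as a missing file (404).
    with urlopen(llms_txt_url(domain), timeout=10) as resp:
        body = resp.read().decode("utf-8", errors="replace")
        return resp.status == 200 and looks_like_llms_txt(body)
```

A common failure this catches is a server returning an HTML page (for example, a custom 404 page with a 200 status) instead of the Markdown file.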

If you build your website with Hostinger Horizons, an llms.txt file is generated automatically when you publish your project, so your site is accessible to AI by default.

Technical and authority factors are essential for AI search optimization because they help AI systems determine if your content is both accessible and trustworthy.

Technical optimizations involve ensuring your website is structured and configured in a way that AI crawlers can easily understand and process. For example, a fast-loading, mobile-friendly site provides a strong signal of quality and technical soundness.

Similarly, authority-building activities are crucial for proving to AI that your content is credible and reliable. AI models rely on signals like backlinks from reputable sources and brand mentions to establish trust.

By combining a solid technical foundation with strong authority signals, you can increase the likelihood of your content being cited and synthesized into direct answers by AI.

Technical SEO for AI means ensuring your site is easily accessible and readable by AI crawlers. This goes beyond traditional SEO practices and focuses on how AI bots behave.

Adapting to AI search is not just about keeping up with trends; it’s essential for future-proofing your online presence. Here are several benefits of optimizing for AI:

Remember that although generative engine optimization (GEO) and AI search optimization are important, they won’t completely replace traditional SEO. Instead, they complement it, and together they help ensure your content is helpful for users.

As AI models become more sophisticated, they will get better at understanding complex queries and synthesizing information. This will put an even greater emphasis on creating high-quality, semantically rich content.

New types of AI agents that can perform complex, multi-step tasks for users will also emerge. Your content strategy will need to adapt to these agents by providing clear, actionable instructions and a well-structured site map.

Moreover, the rapid rise of voice searches using assistants like Siri means your content must be optimized for how people naturally speak. This reinforces the need for a conversational tone and direct answers.

The best way to prepare for the future is to focus on creating great content for humans first, and then structure it for AI second. We recommend checking out our other tutorial to learn more about using AI for your website.